Sakarya Vallis

Immersive virtual environment

2020

Original Mars photographs: HiRISE, Lunar and Planetary Laboratory, University of Arizona

Heightmap created via the Ames Stereo Pipeline (ASP) tools, NASA Ames Research Center

3D model created in Blender

Immersive environment created in Unreal Engine

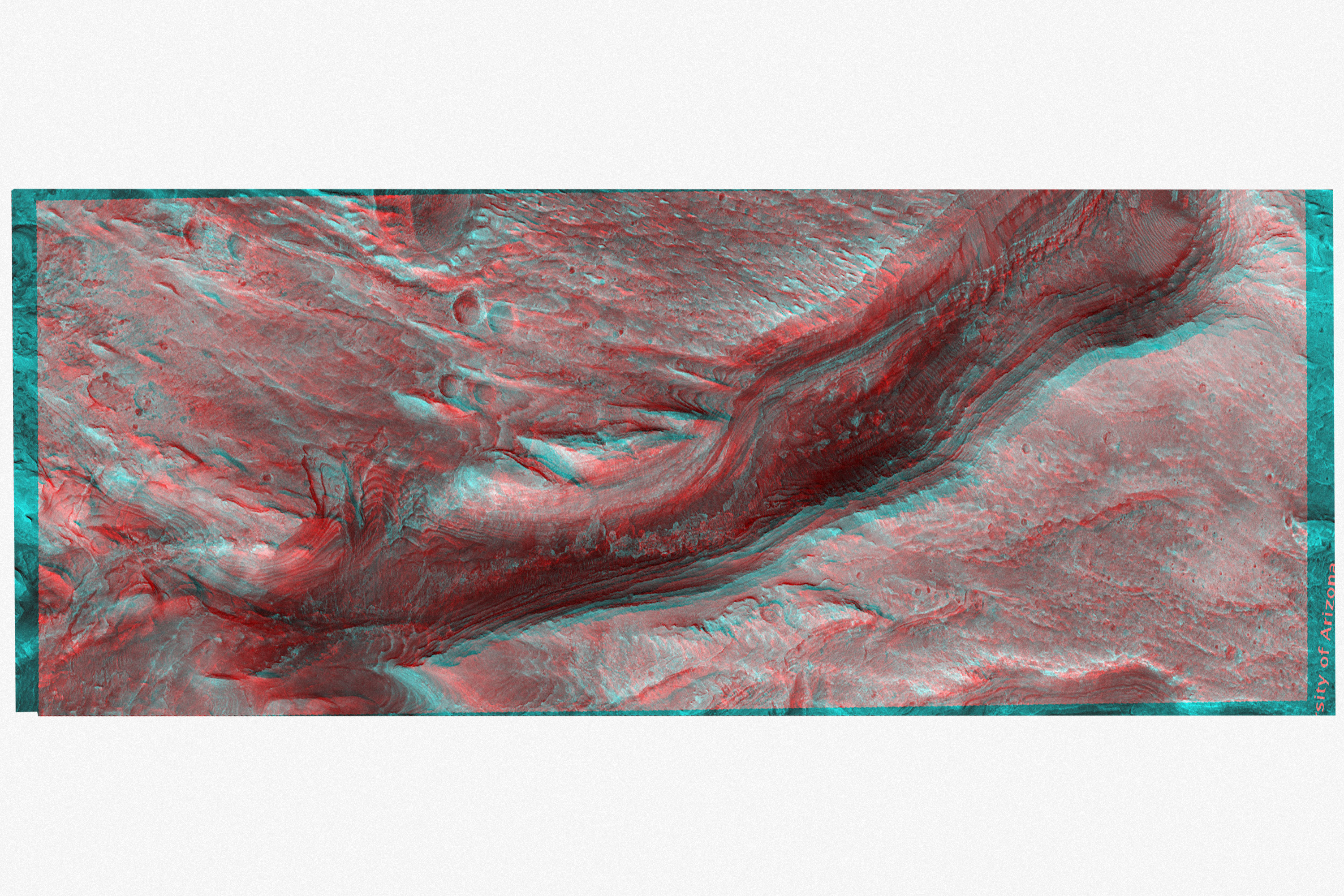

Immersive 3D reconstruction of ‘Sakarya Vallis’, a chasm in the Interior Mound of Gale Crater on the surface of the planet Mars.

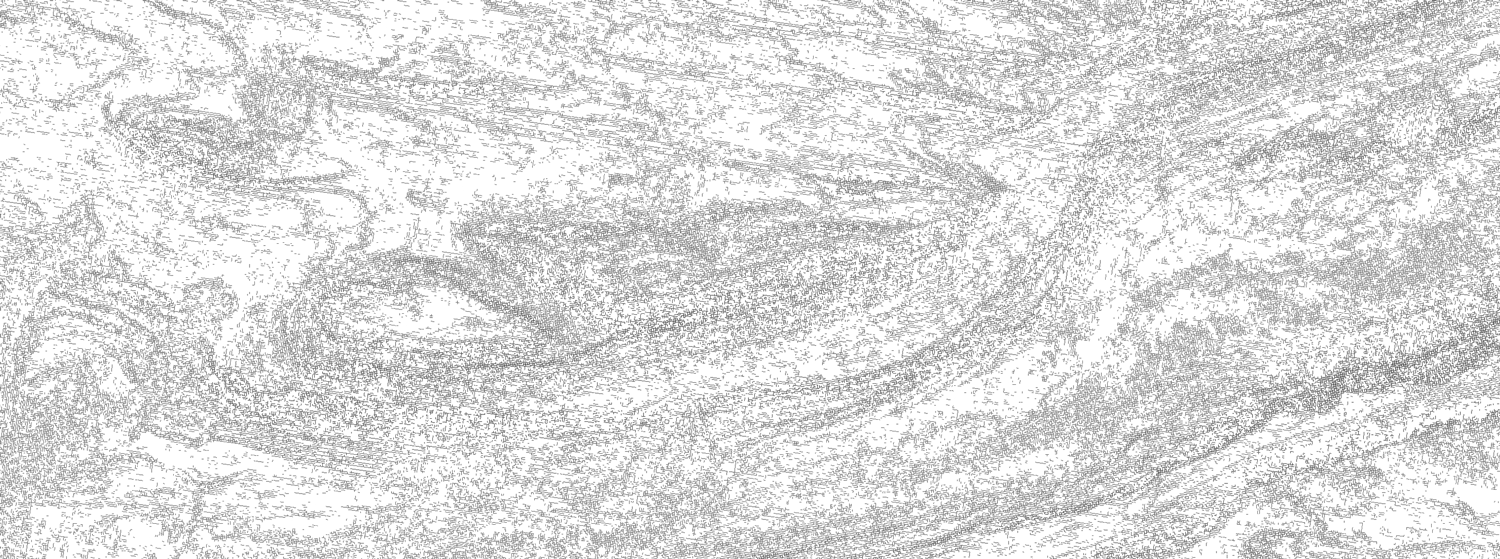

High contrast topology

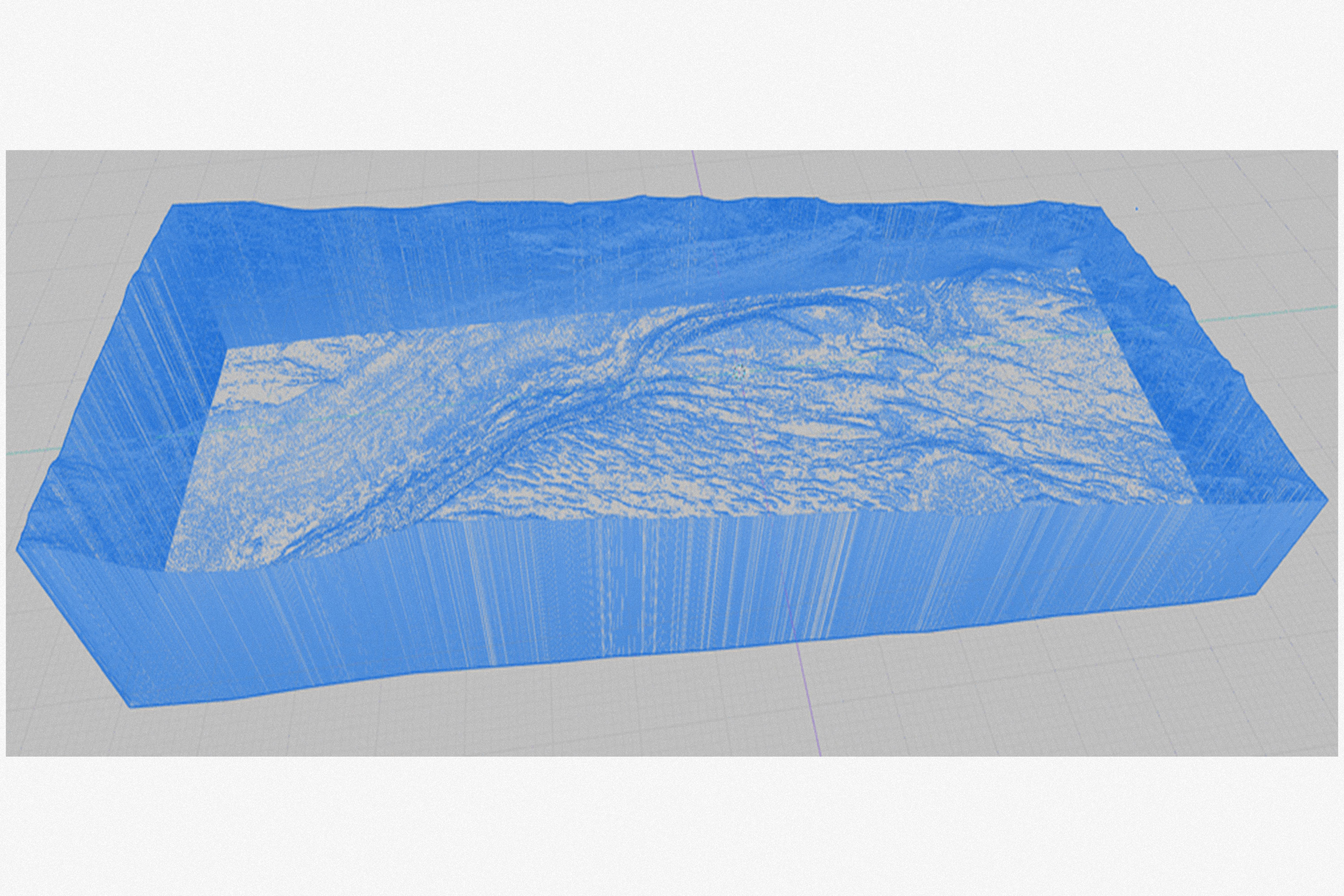

3D Mesh, Blender


VR Immersive environment, Unreal Engine.

This project grew out of curiosity triggered by an image of a ‘chasm in the inner mound of Gale Crater’ (see top image). The caption described the feature as ‘a chasm that may actually be classified as a canyon, which is specifically a chasm or gorge that was carved by running water.’ In other words, an ancient riverbed.

But how would past martian or future human inhabitants experience this environment? Would it look or feel familiar to us? [ ... ]

This project has three scopes:

- to accurately digitally reconstruct the terrain features (ongoing),

- to explore the human experience of this particular environment (to be started),

- to investigate a speculative future use case of this area on a terraformed martian surface (to be started).

Digital reconstruction:

The reconstruction starts with a pair of stereoscopic orthoimages acquired by the HiRISE camera onboard the Mars Reconnaissance Orbiter (MRO) at around 270 km above the martian surface. While flying overhead, the spacecraft’s camera takes two images of the same surface feature at different times and from slightly different positions.

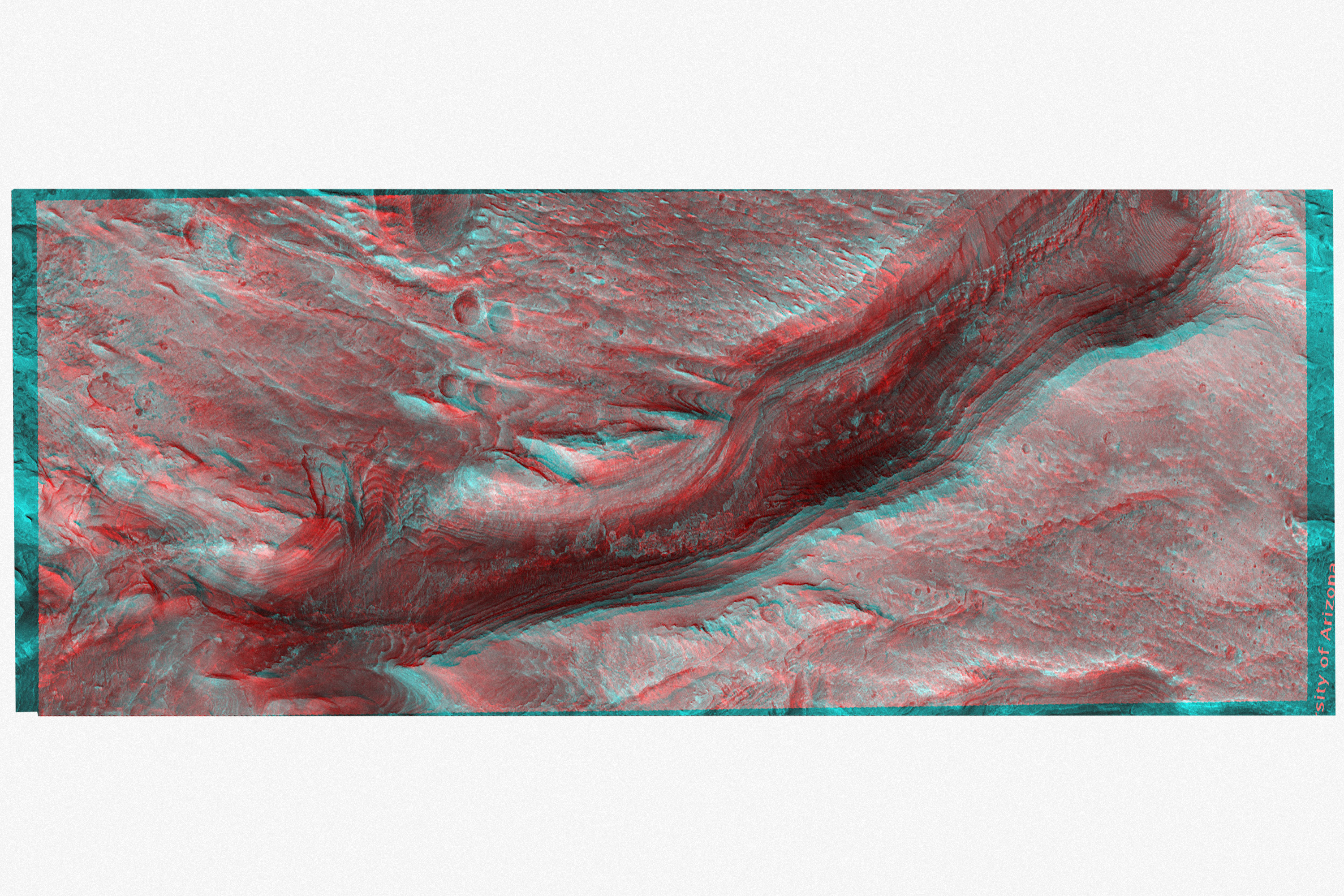

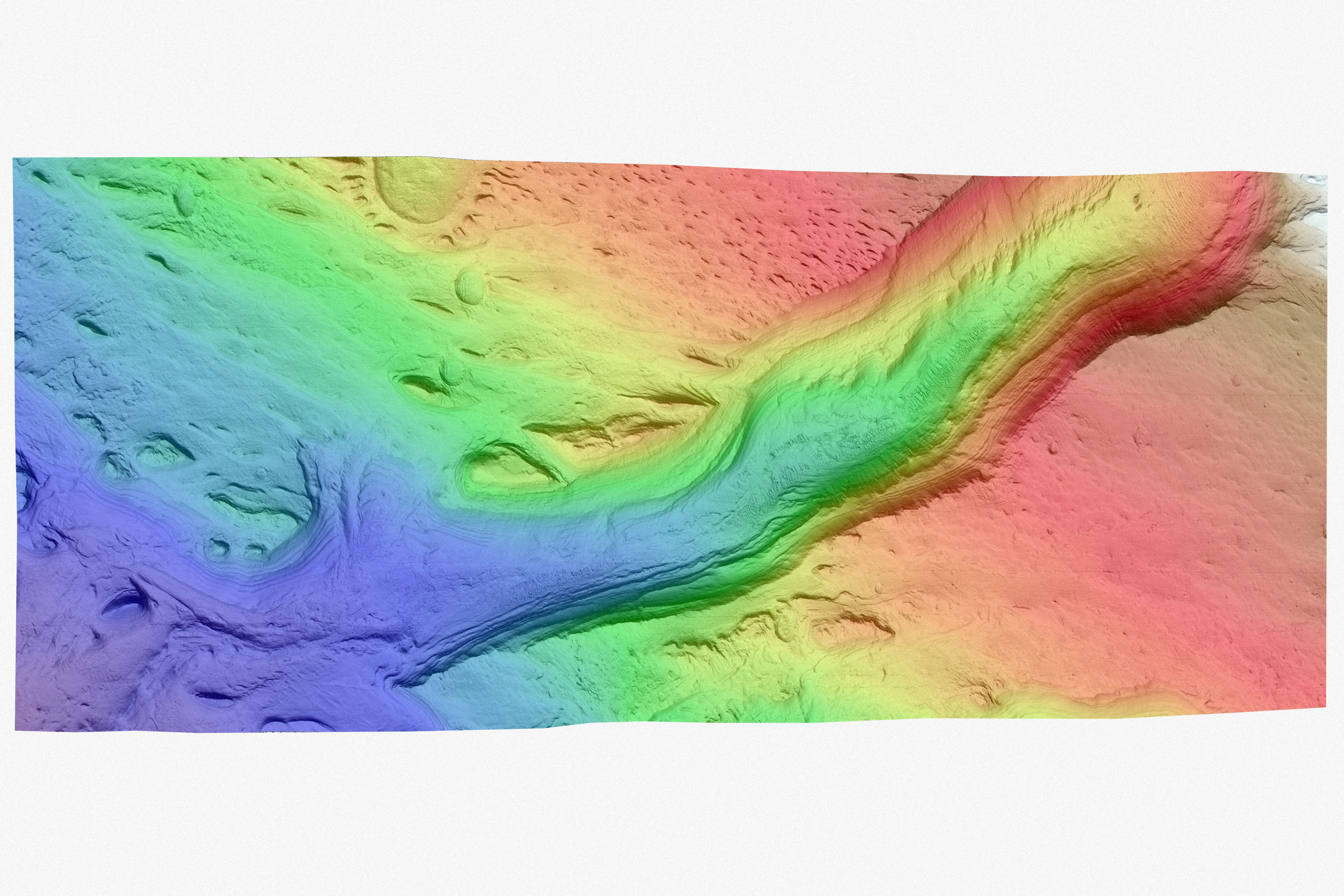

By combining these two images, a stereoscopic image can be created that contains depth information and forms the basis for further development.

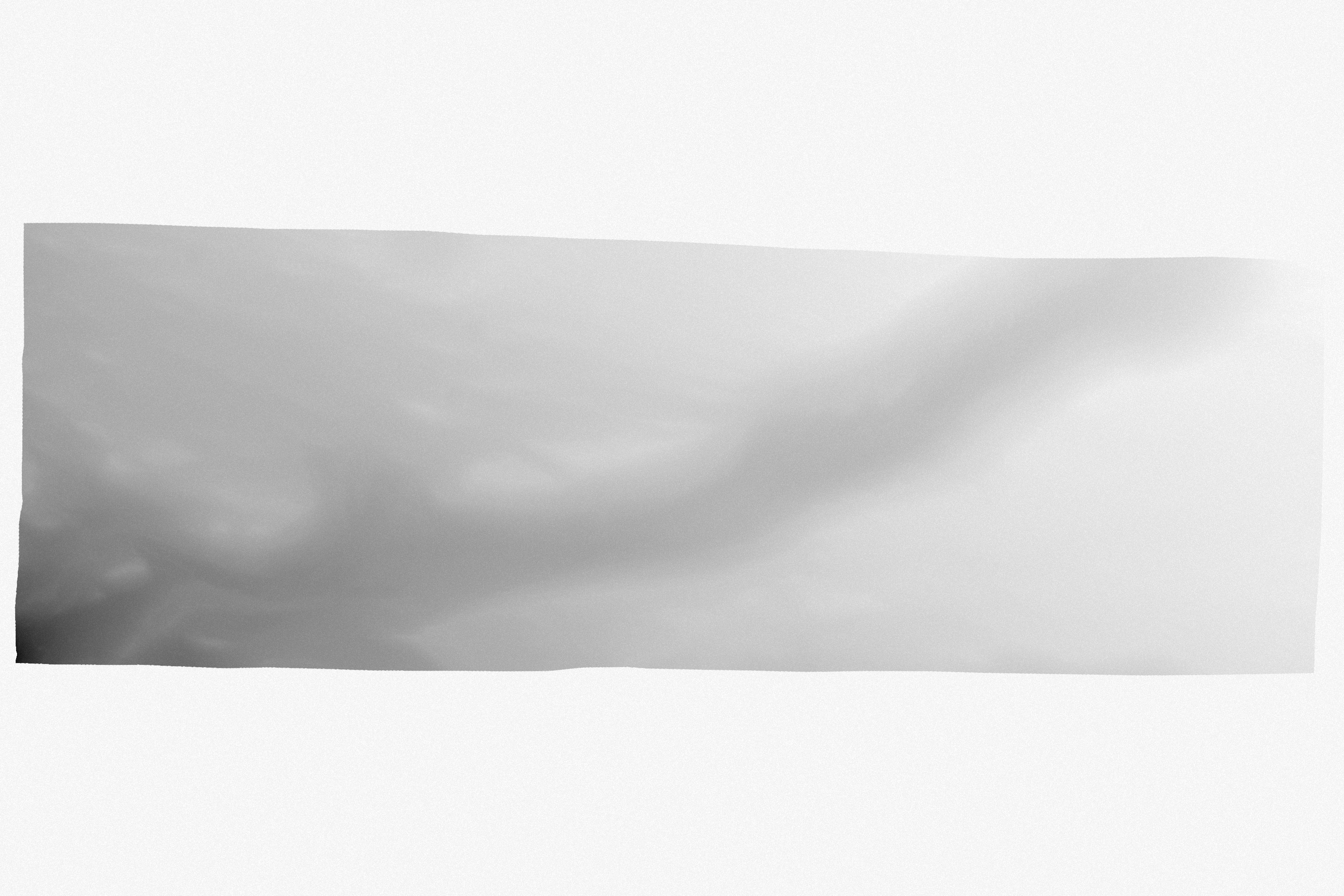
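The depth information comes from parallax: a point that sits higher shifts more between the two views than a point that sits lower. As a rough sketch of that idea, using simplified flat-ground geometry rather than the full camera-model triangulation a stereo tool performs, the height difference is the measured shift divided by the base-to-height ratio of the two viewing directions. The function names and the example angles are illustrative assumptions, not values from this image pair.

```python
import math


def base_to_height_ratio(angle_a_deg: float, angle_b_deg: float) -> float:
    """Base-to-height ratio for two signed off-nadir viewing angles (degrees).

    Angles are measured from straight down; views tilted to opposite
    sides of nadir have opposite signs.
    """
    tan_a = math.tan(math.radians(angle_a_deg))
    tan_b = math.tan(math.radians(angle_b_deg))
    return abs(tan_a - tan_b)


def height_from_parallax(parallax_m: float, ratio: float) -> float:
    """Height difference (m) that produces a ground shift of parallax_m metres."""
    if ratio == 0:
        raise ValueError("identical viewing angles carry no depth information")
    return parallax_m / ratio


# Hypothetical pair: one view straight down, one tilted by 20 degrees.
ratio = base_to_height_ratio(0.0, 20.0)
print(round(ratio, 3))
print(round(height_from_parallax(1.0, ratio), 2))
```

The closer the two viewing angles are, the smaller the ratio becomes and the less reliably a given shift translates into height, which is why the two images need to be taken from noticeably different positions.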

In this case, I acquired the images via the University of Arizona and did the stereoscopic processing with the Ames Stereo Pipeline, developed at NASA Ames Research Center. The final result is a high-resolution greyscale depth map with a surface altitude and feature resolution of around 50 cm per pixel.

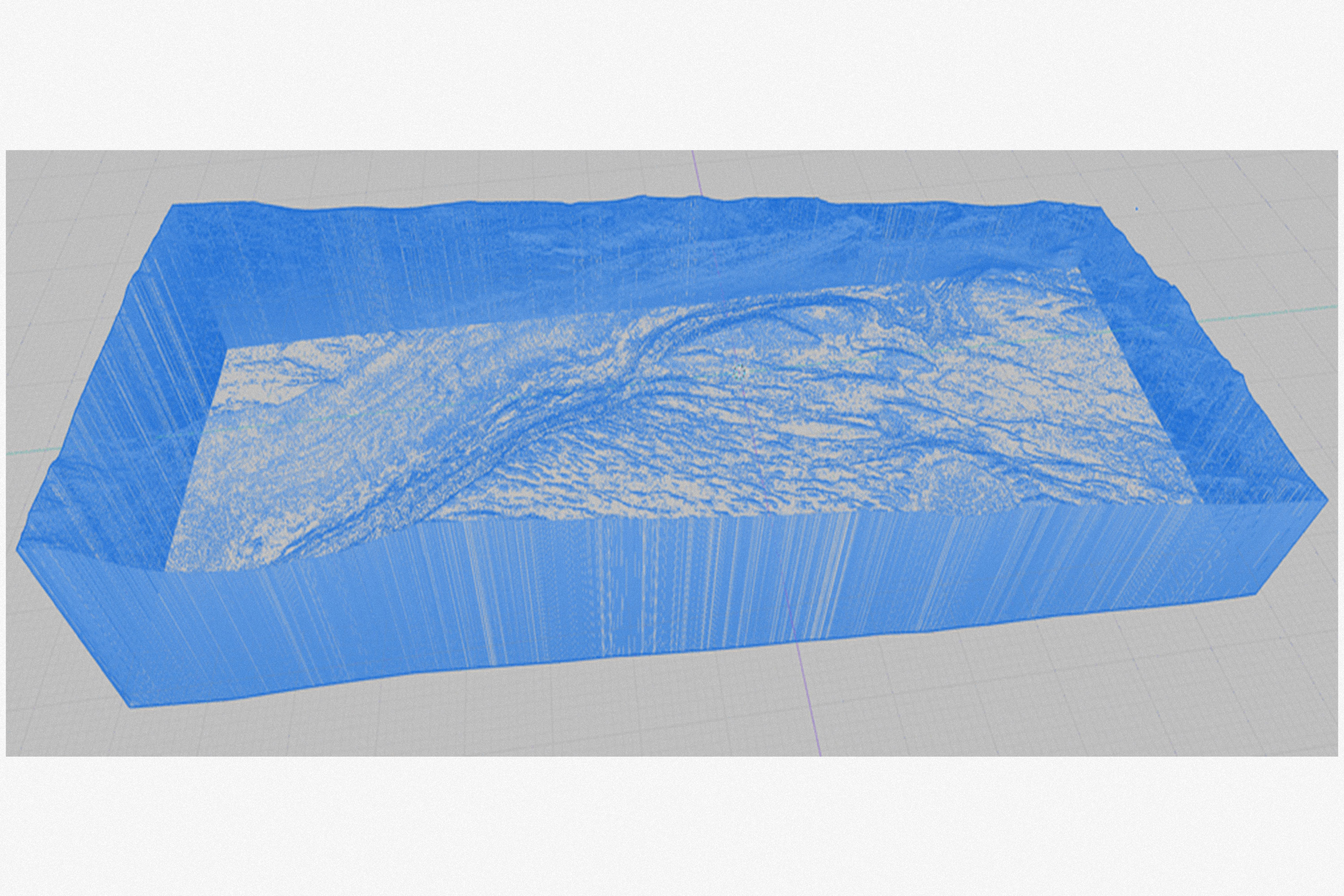
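A greyscale depth map stores height as brightness, so reading it back means rescaling pixel values to metres. A minimal sketch, assuming a 16-bit image in which black is the lowest point and white is the highest, scaled linearly. The function name and the elevation range are made-up placeholders, not the measured range of Sakarya Vallis.

```python
def pixel_to_elevation(value: int, low_m: float, high_m: float,
                       max_value: int = 65535) -> float:
    """Map a greyscale pixel value linearly onto the range [low_m, high_m]."""
    if not 0 <= value <= max_value:
        raise ValueError("pixel value outside the image's bit depth")
    return low_m + (value / max_value) * (high_m - low_m)


# Placeholder range: the real minimum and maximum come from the stereo output.
LOW_M, HIGH_M = -4000.0, -3000.0

print(pixel_to_elevation(0, LOW_M, HIGH_M))      # darkest pixel, lowest point
print(pixel_to_elevation(65535, LOW_M, HIGH_M))  # brightest pixel, highest point
```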

The depth map is imported into the Blender 3D modelling software and converted to an actual 3D terrain model of about 5 x 15 km.
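Turning a depth map into a terrain model comes down to placing one vertex per sampled pixel and connecting neighbouring vertices into faces. The sketch below shows that step in plain Python under stated assumptions: the helper name `heightmap_to_mesh`, the `spacing_m` and `step` parameters and the tiny sample grid are all hypothetical, and this is not necessarily how the model for this project was built. Skipping pixels with `step` matters because, at 50 cm per pixel, a 5 x 15 km area is about 10,000 x 30,000 samples.

```python
def heightmap_to_mesh(heights, spacing_m=0.5, step=1):
    """Build (vertices, faces) from a 2D grid of elevations in metres.

    heights   -- list of rows, each a list of elevations
    spacing_m -- ground distance between neighbouring pixels
    step      -- keep every step-th pixel to reduce the vertex count
    """
    grid = [row[::step] for row in heights[::step]]
    n_rows, n_cols = len(grid), len(grid[0])
    cell = spacing_m * step  # ground distance between kept samples

    vertices = [
        (col * cell, row * cell, grid[row][col])
        for row in range(n_rows)
        for col in range(n_cols)
    ]

    faces = []
    for row in range(n_rows - 1):
        for col in range(n_cols - 1):
            i = row * n_cols + col
            # One quad per grid cell, corners listed in a consistent order.
            faces.append((i, i + 1, i + n_cols + 1, i + n_cols))
    return vertices, faces


sample = [
    [0.0, 1.0, 2.0],
    [0.5, 1.5, 2.5],
    [1.0, 2.0, 3.0],
]
vertices, faces = heightmap_to_mesh(sample, spacing_m=0.5)
print(len(vertices), len(faces))
```

Inside Blender, lists in this shape could be handed to `Mesh.from_pydata(vertices, edges, faces)` to create the mesh object, with an empty list for `edges`.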

This 3D model is then imported into a game engine and textured, recreating a relatively true-scale replica of this martian terrain feature which a player can freely explore on-screen and in virtual reality.

To do:

- optimizing the texturing of the landscape,

- starting phases two and three.

VR walkthrough, Oculus Quest 2
